Top Level Await

Reference Materials

Top-Level Await Stage 3 Draft Proposal-May 28, 2021

Top-Level Await Proposal GitHub-May 28, 2021

Standard Proposal

Proponents: Myles Borins, Yulia Startsev

Authors: Myles Borins, Yulia Startsev, Daniel Ehrenberg, Guy Bedford, Ms2ger, etc.

Status: Stage 4

Synopsis

Top-level await (TLA) enables modules to act as big async functions:

With TLA, an ECMAScript Module (ESM) can wait for resources to load. Any other module that imports it then waits for that asynchronous work to complete before it starts executing its own body.

Motivation

Limitations on IIAFEs

Before the TLA proposal, await could only be used inside async functions. This means that a module that wanted to await something at its top level had to wrap that logic in an async function and call it:

// awaiting.mjs

import { process } from './some-module.mjs';

let output;

async function main() {

const dynamic = await import(computedModuleSpecifier);

const data = await fetch(url);

output = process(dynamic.default, data);

}

main();

export { output };

The async function can also be invoked immediately. This pattern is known as an Immediately Invoked Async Function Expression (IIAFE), a variant of the IIFE idiom:

// awaiting.mjs

import { process } from './some-module.mjs';

let output;

(async () => {

const dynamic = await import(computedModuleSpecifier);

const data = await fetch(url);

output = process(dynamic.default, data);

})();

export { output };

This pattern is appropriate when loading the module is meant to schedule work that completes some time later. However, the module's exports may be accessed by other code before the async function completes:

If another module imports this one, it might see output as undefined, or it might see it after it has been set to the return value of process, depending on when the access happens. For example:

// usage.mjs

import { output } from './awaiting.mjs';

export function outputPlusValue(value) {

return output + value;

}

console.log(outputPlusValue(100));

setTimeout(() => console.log(outputPlusValue(100)), 1000);

Workaround: Export a Promise to represent initialization

Without TLA, a module can export a Promise; dependent modules then know that the exports are ready once that Promise has resolved. For example, the module above can be written like this:

// awaiting.mjs

import { process } from './some-module.mjs';

let output;

export default (async () => {

const dynamic = await import(computedModuleSpecifier);

const data = await fetch(url);

output = process(dynamic.default, data);

})();

export { output };

Other modules can then consume it as follows:

// usage.mjs

import promise, { output } from './awaiting.mjs';

export function outputPlusValue(value) {

return output + value;

}

promise.then(() => {

console.log(outputPlusValue(100));

setTimeout(() => console.log(outputPlusValue(100)), 1000);

});

Legacy Problems

However, this approach still has some problems:

- Everyone has to learn a specific protocol: find the right Promise and wait for it to resolve before safely accessing the exported data.

- If a consumer forgets to follow this protocol, race conditions can occur. For example, reading output may sometimes work and sometimes return undefined.

- In deep module dependency graphs, Promises are contagious, so the Promise has to be explicitly passed up at every step of the chain.
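The race is easy to reproduce. The sketch below assumes Node.js, which can import ES modules from data:text/javascript URLs, so awaiting.mjs and usage.mjs can be built in memory. The moduleUrl helper, the global __raceLog array, and the 10 ms timer standing in for fetch(url) are illustrative assumptions, not part of the proposal. usage.mjs reads output once without waiting (the forgotten protocol) and once after the exported promise settles.

```javascript
// Build an importable ES module from source text (Node.js supports data: URL imports).
const moduleUrl = src => `data:text/javascript,${encodeURIComponent(src)}`;

// awaiting.mjs: exports a binding that an async IIFE fills in later.
// The 10 ms timer is a stand-in for fetch(url).
const awaitingUrl = moduleUrl(`
  export let output;
  export default (async () => {
    const data = await new Promise(resolve => setTimeout(() => resolve(42), 10));
    output = data * 2;
  })();
`);

// usage.mjs: one read ignores the protocol, the other follows it.
const usageUrl = moduleUrl(`
  import promise, { output } from ${JSON.stringify(awaitingUrl)};
  globalThis.__raceLog.push(['without waiting', output]);
  promise.then(() => globalThis.__raceLog.push(['after the promise', output]));
`);

globalThis.__raceLog = [];
const raceDone = import(usageUrl)
  .then(() => new Promise(resolve => setTimeout(resolve, 50))) // let the IIAFE finish
  .then(() => globalThis.__raceLog);

// Logs the first read as undefined and the second as 84.
raceDone.then(log => console.log(log));
```

The only difference between the two reads is timing, which is exactly why consumers that skip the protocol get inconsistent results.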

Avoiding the race through significant additional dynamism

To avoid the risk of accessing the exports before waiting for the exported Promise, a module can instead export a Promise that resolves to an object containing all of its exports:

// awaiting.mjs

import { process } from "./some-module.mjs";

export default (async () => {

const dynamic = await import(computedModuleSpecifier);

const data = await fetch(url);

const output = process(dynamic.default, data);

return { output };

})();

// usage.mjs

import promise from "./awaiting.mjs";

export default promise.then(({output}) => {

function outputPlusValue(value) { return output + value }

console.log(outputPlusValue(100));

setTimeout(() => console.log(outputPlusValue(100)), 1000);

return { outputPlusValue };

});

Although this pattern is sometimes recommended on Stack Overflow to developers who run into this problem, it is not an ideal solution. It requires extensive restructuring of the source code into a more dynamic form, and it forces much of the module's code into .then() callbacks just to use the imported data safely.

Compared to ES2015 modules, this is a clear regression in static analyzability, testability, ergonomics, and more. If a module deep in the dependency graph uses await, every module that depends on it, directly or transitively, has to be restructured into this pattern.

Solution: Top-level await

TLA allows us to rely on the module system itself to handle all these Promises and ensure everything coordinates well. The above example can be simply written as:

// awaiting.mjs

import { process } from './some-module.mjs';

const dynamic = import(computedModuleSpecifier);

const data = fetch(url);

export const output = process((await dynamic).default, await data);

// usage.mjs

import { output } from './awaiting.mjs';

export function outputPlusValue(value) {

return output + value;

}

console.log(outputPlusValue(100));

setTimeout(() => console.log(outputPlusValue(100)), 1000);

None of the statements in usage.mjs run until the awaits in awaiting.mjs have completed, so the race condition is avoided by design. This extends existing ES module behavior: even without TLA, the body of usage.mjs does not run until awaiting.mjs has been loaded and all of its statements have executed.
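A minimal Node.js sketch of the same pair of modules shows the effect. It assumes in-memory data: URL modules built with an illustrative moduleUrl helper, and a 10 ms timer standing in for fetch(url). usage.mjs reads output synchronously at its top level and always sees the initialized value, because its body does not run until awaiting.mjs has finished.

```javascript
// Build an importable ES module from source text (Node.js supports data: URL imports).
const moduleUrl = src => `data:text/javascript,${encodeURIComponent(src)}`;

// awaiting.mjs: the asynchronous work is awaited at the top level.
// The 10 ms timer is a stand-in for fetch(url).
const awaitingUrl = moduleUrl(`
  const data = await new Promise(resolve => setTimeout(() => resolve(42), 10));
  export const output = data * 2;
`);

// usage.mjs: reads output immediately, with no extra protocol.
const usageUrl = moduleUrl(`
  import { output } from ${JSON.stringify(awaitingUrl)};
  export function outputPlusValue(value) {
    return output + value;
  }
  export const firstRead = outputPlusValue(100);
`);

const firstRead = import(usageUrl).then(ns => ns.firstRead);
firstRead.then(value => console.log(value)); // 184
```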

Use Cases

Dynamic Dependency Pathing

const strings = await import(`/i18n/${navigator.language}`);

This lets a module use runtime values to determine its dependencies, which is useful for development/production splits, internationalization, environment-specific code, and so on.
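A minimal Node.js sketch of the idea, under stated assumptions: the two locale modules are in-memory data: URL modules standing in for files such as /i18n/en and /i18n/fr, and the locale is passed in as a plain string instead of being read from navigator.language. The module that awaits the import picks its dependency from that runtime value; falling back to English for unknown locales is an illustrative choice, not something the proposal prescribes.

```javascript
// Build an importable ES module from source text (Node.js supports data: URL imports).
const moduleUrl = src => `data:text/javascript,${encodeURIComponent(src)}`;

// Hypothetical per-locale string modules (stand-ins for /i18n/en and /i18n/fr).
const localeModules = {
  en: moduleUrl(`export default { greeting: 'Hello' };`),
  fr: moduleUrl(`export default { greeting: 'Bonjour' };`),
};

// Builds a module whose dependency is chosen from a runtime value, like
// await import(`/i18n/${navigator.language}`) in the example above.
function stringsModuleFor(locale) {
  const url = localeModules[locale] ?? localeModules.en; // unknown locale: English
  return moduleUrl(`
    const { default: strings } = await import(${JSON.stringify(url)});
    export const greeting = strings.greeting;
  `);
}

const greetings = Promise.all([
  import(stringsModuleFor('fr')).then(ns => ns.greeting),
  import(stringsModuleFor('de')).then(ns => ns.greeting),
]);
greetings.then(list => console.log(list)); // [ 'Bonjour', 'Hello' ]
```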

Resource Initialization

const connection = await dbConnector();

This lets a module represent a resource: if the resource cannot be used, the module throws an error.
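The sketch below shows what happens when the awaited resource fails. It assumes Node.js data: URL modules; dbConnector here is a hypothetical function that always rejects, and __appRan is an illustrative global flag. The rejection becomes the evaluation error of db.mjs, the body of app.mjs never runs, and the import() of app.mjs rejects with the same error.

```javascript
// Build an importable ES module from source text (Node.js supports data: URL imports).
const moduleUrl = src => `data:text/javascript,${encodeURIComponent(src)}`;

// db.mjs: a hypothetical dbConnector() that can never connect.
const dbUrl = moduleUrl(`
  const dbConnector = () => Promise.reject(new Error('connection refused'));
  export const connection = await dbConnector();
`);

// app.mjs: depends on the resource; its body only runs if db.mjs succeeds.
const appUrl = moduleUrl(`
  import { connection } from ${JSON.stringify(dbUrl)};
  globalThis.__appRan = true;
`);

globalThis.__appRan = false;
const appOutcome = import(appUrl).then(
  () => 'loaded',
  err => `failed: ${err.message}`,
);
appOutcome.then(msg => console.log(msg, '| app body ran:', globalThis.__appRan));
// failed: connection refused | app body ran: false
```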

Dependency Fallbacks

let jQuery;

try {

jQuery = await import('https://cdn-a.com/jQuery');

} catch {

jQuery = await import('https://cdn-b.com/jQuery');

}

WebAssembly Modules

WebAssembly modules are compiled and instantiated asynchronously, based on their imports. Some WebAssembly implementations do significant work in both phases, and that work is best moved to another thread. To integrate with the JavaScript module system, WebAssembly modules need to do the equivalent of a top-level await. For more details, see the WebAssembly ESM integration proposal.
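The same effect can be approximated by hand with TLA. In this Node.js sketch, a wrapper module compiles and instantiates a WebAssembly module at its top level, so importers only ever see a ready instance. The byte array is a hand-assembled module exporting a single add(i32, i32) function, included purely for illustration, and moduleUrl is an assumed data: URL helper. This is not how the ESM integration proposal itself works, only the equivalent behavior written out in JavaScript.

```javascript
// Build an importable ES module from source text (Node.js supports data: URL imports).
const moduleUrl = src => `data:text/javascript,${encodeURIComponent(src)}`;

// Hand-assembled WebAssembly binary: (func (export "add") (param i32 i32) (result i32)).
const wasmBytes = [
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic "\0asm", version 1
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type section: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00, // function section: function 0 uses type 0
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00, // export section: "add" = function 0
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b, // code: local.get 0, local.get 1, i32.add
];

// wasm-wrapper.mjs: compile and instantiate at the top level, then export.
const wrapperUrl = moduleUrl(`
  const bytes = new Uint8Array(${JSON.stringify(wasmBytes)});
  const { instance } = await WebAssembly.instantiate(bytes);
  export const add = instance.exports.add;
`);

const sum = import(wrapperUrl).then(ns => ns.add(2, 3));
sum.then(value => console.log(value)); // 5
```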

Semantics As Desugaring

Currently, a module is not considered to have finished importing its dependencies until they have finished executing, and its own body cannot run before that point. With await, this stays the same: a module's body runs only after all of its dependencies have finished executing.

One way to understand this is to imagine that each module exports a Promise and waits for the Promises of all its imports before executing the rest of its body:

import { a } from './a.mjs';

import { b } from './b.mjs';

import { c } from './c.mjs';

console.log(a, b, c);

This is roughly equivalent to:

import { a, promise as aPromise } from './a.mjs';

import { b, promise as bPromise } from './b.mjs';

import { c, promise as cPromise } from './c.mjs';

export const promise = Promise.all([aPromise, bPromise, cPromise]).then(

() => {

console.log(a, b, c);

}

);

Each of a.mjs, b.mjs, and c.mjs executes up to the first await it encounters (if any); the importing module then waits for all of them to resume and finish before continuing.
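The desugaring can also be simulated without any module system. In this sketch, fakeModule is a hypothetical stand-in for a module with a top-level await: the synchronous part of its body runs immediately, and the promise it returns plays the role of the exported promise. The importer starts all three in import order and runs its own body only after Promise.all settles, so all three dependencies start before any of them finishes.

```javascript
const log = [];
const sleep = ms => new Promise(resolve => setTimeout(resolve, ms));

// Hypothetical module with a top-level await: it runs until its first await
// right away; the returned promise corresponds to `promise` in the desugaring.
function fakeModule(name, ms) {
  const moduleExports = {};
  log.push(`${name}:start`);
  const promise = (async () => {
    await sleep(ms);
    moduleExports.value = name.toUpperCase();
    log.push(`${name}:end`);
  })();
  return { moduleExports, promise };
}

// The importing module: evaluate a, b, c in import order, then wait for all
// of them together before running the rest of its body.
const a = fakeModule('a', 30);
const b = fakeModule('b', 10);
const c = fakeModule('c', 20);
const mainBody = Promise.all([a.promise, b.promise, c.promise]).then(() => {
  log.push('main');
  return [a.moduleExports.value, b.moduleExports.value, c.moduleExports.value];
});

// Values are [ 'A', 'B', 'C' ]; the log shows all three starts before any end.
mainBody.then(values => console.log(values, log.join(' -> ')));
```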

TLA Landing Status

To explore how TLA works in various build tools, consider the following example:

// main.js

import { a } from './a';

import { b } from './b';

import { sleep } from './utils';

await sleep(1000);

console.log(a, b);

console.timeEnd('TLA');

// a.js

import { sleep } from './utils';

console.time('TLA');

await sleep(1000);

export const a = 124;

// b.js

import { sleep } from './utils';

await sleep(1000);

export const b = 124;

// utils.js

export const sleep = time =>

new Promise(resolve => {

setTimeout(resolve, time);

});

When this example is bundled by the ESM bundlers (rollup, esbuild, bun, rolldown), the outputs are as follows:

rollup:

const sleep = time =>

new Promise(resolve => {

setTimeout(resolve, time);

});

console.time('TLA');

await sleep(1000);

const a = 124;

await sleep(1000);

const b = 124;

await sleep(1000);

console.log(a, b);

console.timeEnd('TLA');

esbuild:

// utils.js

var sleep = time =>

new Promise(resolve => {

setTimeout(resolve, time);

});

// a.js

console.time('TLA');

await sleep(1e3);

var a = 124;

// b.js

await sleep(1e3);

var b = 124;

// main.js

await sleep(1e3);

console.log(a, b);

console.timeEnd('TLA');

bun:

// utils.js

var sleep = time =>

new Promise(resolve => {

setTimeout(resolve, time);

});

// a.js

console.time('TLA');

await sleep(1000);

var a = 124;

// b.js

await sleep(1000);

var b = 124;

// main.mjs

await sleep(1000);

console.log(a, b);

console.timeEnd('TLA');

rolldown:

//#region src/tla/utils.js

const sleep = time =>

new Promise(resolve => {

setTimeout(resolve, time);

});

//#endregion

//#region src/tla/a.js

console.time('TLA');

await sleep(1e3);

const a = 124;

//#endregion

//#region src/tla/b.js

await sleep(1e3);

const b = 124;

//#endregion

//#region src/tla/main.js

await sleep(1e3);

console.log(a, b);

console.timeEnd('TLA');

//#endregion

Relevant Cases

bun: bun emits TLA directly into its output without considering compatibility, targeting only modern browser runtimes.

rolldown: rolldown's official documentation makes a similar statement:

At this point, the principle of supporting TLA in rolldown is: we will make it work after bundling without preserving 100% semantic as the original code.

Current rules are:

- If your input contains TLA, it could only be bundled and emitted with esm format.

- require TLA module is forbidden.

This shows that rolldown has not yet fully implemented the semantics of TLA and, like the other ESM bundlers, ends up loading async modules serially.

In short, the common ESM bundlers (rollup, esbuild, bun, rolldown) simply flatten the dependencies in order. They add no runtime handling for TLA modules (an ES2022 feature), so the output loads async modules serially rather than in parallel, which changes the semantics of TLA.

Following the desugaring described in the proposal, the TLA example above can be translated as follows:

// main.js

import { _TLAPromise as _TLAPromise_1, a } from './a';

import { _TLAPromise as _TLAPromise_2, b } from './b';

import { sleep } from './utils';

Promise.all([_TLAPromise_1(), _TLAPromise_2()])

.then(async () => {

await sleep(1000);

console.log(a, b);

console.timeEnd('TLA');

})

.catch(e => {

console.log(e);

});

// a.js

import { sleep } from './utils';

console.time('TLA');

export const _TLAPromise = async () => {

await sleep(1000);

};

export const a = 124;

// b.js

import { sleep } from './utils';

export const _TLAPromise = async () => {

await sleep(1000);

};

export const b = 124;

After this translation, bundling with the ESM bundlers (rollup, esbuild, bun, rolldown) produces the following output:

rollup:

const sleep = time =>

new Promise(resolve => {

setTimeout(resolve, time);

});

console.time('TLA');

const _TLAPromise$1 = async () => {

await sleep(1000);

};

const a = 124;

const _TLAPromise = async () => {

await sleep(1000);

};

const b = 124;

Promise.all([_TLAPromise$1(), _TLAPromise()])

.then(async () => {

await sleep(1000);

console.log(a, b);

console.timeEnd('TLA');

})

.catch(e => {

console.log(e);

});

esbuild:

// utils.js

var sleep = time =>

new Promise(resolve => {

setTimeout(resolve, time);

});

// a.js

console.time('TLA');

var _TLAPromise = async () => {

await sleep(1e3);

};

var a = 124;

// b.js

var _TLAPromise2 = async () => {

await sleep(1e3);

};

var b = 124;

// TLA.js

Promise.all([_TLAPromise(), _TLAPromise2()])

.then(async () => {

await sleep(1e3);

console.log(a, b);

console.timeEnd('TLA');

})

.catch(e => {

console.log(e);

});

bun:

// utils.js

var sleep = time =>

new Promise(resolve => {

setTimeout(resolve, time);

});

// a.js

var promise = async () => {

await sleep(1000);

};

var a = 124;

var _TLAPromise = promise;

// b.js

var promise2 = async () => {

await sleep(1000);

};

var b = 124;

// TLA.js

console.time('TLA');

Promise.all([_TLAPromise(), _TLAPromise2()])

.then(() => {

console.log(a, b);

console.timeEnd('TLA');

})

.catch(e => {

console.log(e);

});

rolldown:

//#region src/tla/utils.js

const sleep = time =>

new Promise(resolve => {

setTimeout(resolve, time);

});

//#endregion

//#region src/tla/a.js

console.time('TLA');

const _TLAPromise$1 = async () => {

await sleep(1e3);

};

const a = 124;

//#endregion

//#region src/tla/b.js

const _TLAPromise = async () => {

await sleep(1e3);

};

const b = 124;

//#endregion

//#region src/tla/main.js

Promise.all([_TLAPromise$1(), _TLAPromise()])

.then(async () => {

await sleep(1e3);

console.log(a, b);

console.timeEnd('TLA');

})

.catch(e => {

console.log(e);

});

//#endregion

With this translation, the bundled output follows TLA semantics much more closely: the sleeps in a.js and b.js now run in parallel instead of one after the other. The vite-plugin-top-level-await plugin takes essentially this approach, which works around, for now, the inability of ESM bundlers to handle TLA correctly.

Tools With TLA Features

webpack:The earliest build tool to implement the

TLAspecification iswebpack, just ensure theexperiments.topLevelAwaitconfiguration item is set totruewebpackversion5.83.0starts to enable this feature by default.And if

TLAis anesmmodule, then it can be compiled normally.nodeThe

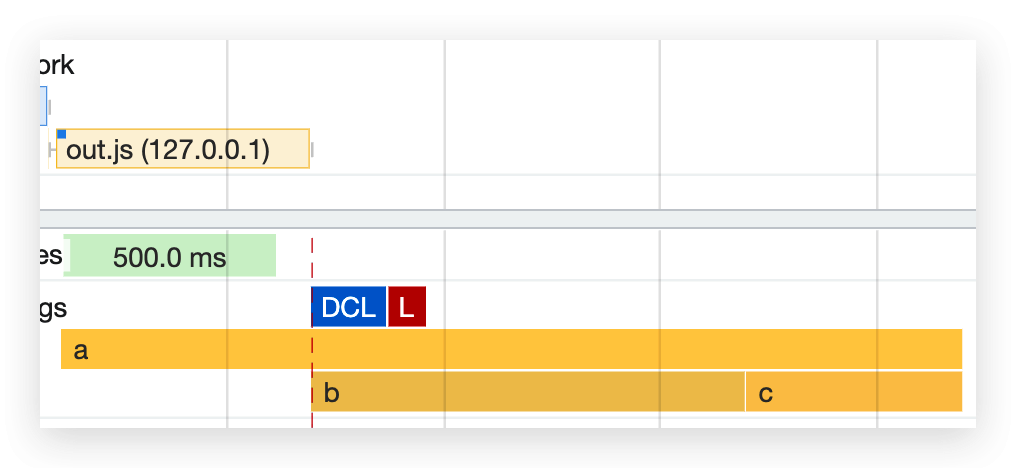

noderuntime implements theTLAspecification inesmprojects. However, the essence of thenoderuntime is different from the generalESM Bundlers, which does not execute the packaging process. The behavior of the runtime is similar to that of browsers.browsersToolChain Environment Timing Summary tscNode.js node esm/a.js 0.03s user 0.01s system 4% cpu 1.047 total b、c execution is parallel tscChrome

b、c execution is parallel es bundleNode.js node out.js 0.03s user 0.01s system 2% cpu 1.546 total b、c execution is serial es bundleChrome

b、c execution is serial Webpack (iife)Node.js node dist/main.js 0.03s user 0.01s system 3% cpu 1.034 total b、c execution is parallel Webpack (iife)Chrome

b、c execution is parallel

Summary

Although ESM bundlers such as rollup, esbuild, bun, and rolldown can compile TLA modules into an ES bundle, the semantics of their output do not conform to the TLA specification. They simply flatten the TLA modules, so TLA modules that could originally run in parallel end up running serially.

webpack reproduces TLA semantics by compiling to an iife plus a fairly complex TLA runtime of its own. In other words, among these bundlers, webpack appears to be the only one that simulates TLA semantics reasonably correctly.
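The difference can be reproduced without any bundler. In this sketch (plain promises; moduleBody, flattened, and perSpec are hypothetical names), flattened awaits the two module bodies one after another, the way the flattened bundles inline a.js and then b.js, while perSpec starts both in order and waits for them together, as TLA semantics allow for sibling modules. In the first log b starts only after a ends; in the second, b starts before a ends.

```javascript
const sleep = ms => new Promise(resolve => setTimeout(resolve, ms));

// Hypothetical body of a.js or b.js, each with one top-level await.
async function moduleBody(name, log) {
  log.push(`${name}:start`);
  await sleep(20);
  log.push(`${name}:end`);
}

// What the flattened bundles do: inline a.js, then b.js.
async function flattened() {
  const log = [];
  await moduleBody('a', log);
  await moduleBody('b', log);
  return log;
}

// What TLA semantics allow: siblings start in order, then are awaited together.
async function perSpec() {
  const log = [];
  await Promise.all([moduleBody('a', log), moduleBody('b', log)]);
  return log;
}

const comparison = Promise.all([flattened(), perSpec()]);
comparison.then(([serial, parallel]) => {
  console.log('flattened:', serial.join(' -> '));
  console.log('per spec: ', parallel.join(' -> '));
});
```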

The Principle Of Implementing TLA Specification In Webpack

To build TLA modules with webpack, use a configuration like the following:

import path from 'path';

import { fileURLToPath } from 'url';

import { dirname } from 'path';

const __filename = fileURLToPath(import.meta.url);

const __dirname = dirname(__filename);

export default {

entry: './src/TLA.js',

output: {

filename: 'main.js',

path: path.resolve(__dirname, 'dist')

},

mode: 'production',

experiments: {

// Starting from `webpack` version 5.83.0, this feature is enabled by default

topLevelAwait: true

},

optimization: {

minimize: false

}

};

The resulting output, including code comments, is available in webpack-tla-output.js.

Summary

TLA is contagious: every module that depends on a TLA module, directly or through any chain of ancestors, becomes asynchronous too. When executing a module, webpack walks all of its dependencies depth-first as usual (require or import). The difference is that TLA modules are initialized specially through __webpack_require__.a, which ensures that the current module waits until all of its async dependencies have resolved before it continues executing its own body.

In essence, webpack implements TLA by simulating the following flow, which is the semantics-as-desugaring described earlier:

import { a } from './a.mjs';

import { b } from './b.mjs';

import { c } from './c.mjs';

console.log(a, b, c);

This is roughly equivalent to:

import { a, promise as aPromise } from './a.mjs';

import { b, promise as bPromise } from './b.mjs';

import { c, promise as cPromise } from './c.mjs';

export const promise = Promise.all([aPromise, bPromise, cPromise]).then(

() => {

console.log(a, b, c);

}

);

A TLA module exposes a promise so that the modules depending on it can tell when its top-level awaits have completed. With Promise.all, a module waits on all of its dependencies (TLA and non-TLA alike) at once, so the pending work of sibling TLA modules proceeds in parallel, while modules without TLA still execute in sequence.
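To make this flow concrete, here is a heavily simplified, hypothetical model of such a runtime. It is not webpack's actual code: define and load are invented names, cyclic dependencies are not handled, and unlike real module semantics every body here runs in a promise callback, even for modules without TLA. load starts every dependency (depth-first, in import order) before waiting on any of them, waits for all of them with Promise.all, and only then runs the module's own body; each module's promise is cached, so a shared dependency such as utils runs once.

```javascript
const sleep = ms => new Promise(resolve => setTimeout(resolve, ms));
const registry = new Map(); // module id -> { deps, body }
const cache = new Map();    // module id -> promise of that module's exports
const log = [];

// Register a module: its dependency ids and a (possibly async) body that
// receives the dependencies' exports and returns its own exports.
function define(id, deps, body) {
  registry.set(id, { deps, body });
}

// Start all dependencies first, wait for all of them, then run the body.
function load(id) {
  if (!cache.has(id)) {
    const { deps, body } = registry.get(id);
    const depPromises = deps.map(dep => load(dep));
    cache.set(id, Promise.all(depPromises).then(depExports => body(...depExports)));
  }
  return cache.get(id);
}

define('utils', [], () => ({ sleep }));
define('a', ['utils'], async utils => {
  log.push('a:start');
  await utils.sleep(20);
  log.push('a:end');
  return { a: 124 };
});
define('b', ['utils'], async utils => {
  log.push('b:start');
  await utils.sleep(20);
  log.push('b:end');
  return { b: 124 };
});
define('main', ['a', 'b'], ({ a }, { b }) => {
  log.push('main');
  return { sum: a + b };
});

const mainLoaded = load('main');
// sum is 248; the log shows b starting before a finishes.
mainLoaded.then(({ sum }) => console.log(sum, log.join(' -> ')));
```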

FAQ

What exactly is blocked by a top-level await?

When a module imports another module, the importing module does not start executing its body until the imported module has finished executing its own body. If the imported module reaches a top-level await, that await must complete before the importing module can start executing.

Why doesn't top-level await block the import of an adjacent module?

If a module wants to depend on another module (that is, wait for that module's top-level await statements to complete before running its own body), it declares that dependency with an import statement.

In the following case, the output order is X1 -> Y -> X2, because importing one module before another does not create an implicit dependency between them.

// main.mjs

import './x.mjs';

import './y.mjs';

// x.mjs

console.log('X1');

await new Promise(r => setTimeout(r, 1000));

console.log('X2');

// y.mjs

console.log('Y');

Requiring dependencies to be declared explicitly is what leaves room for parallelism: most setup work that might be blocked by a top-level await (as in the example above) can run in parallel with the setup of other, unrelated modules. When some of that work is highly parallelizable (network requests, for example), it is important to get it queued as early as possible.
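The ordering can be checked directly. This Node.js sketch builds main.mjs, x.mjs, and y.mjs as in-memory data: URL modules; the moduleUrl helper and the global __faqOrder array are illustrative assumptions, and the timer is shortened to 50 ms. It also records when the body of main.mjs runs, which is only after x.mjs has finished.

```javascript
// Build an importable ES module from source text (Node.js supports data: URL imports).
const moduleUrl = src => `data:text/javascript,${encodeURIComponent(src)}`;
globalThis.__faqOrder = [];

// x.mjs: records X1, suspends on a top-level await, then records X2.
const xUrl = moduleUrl(`
  globalThis.__faqOrder.push('X1');
  await new Promise(r => setTimeout(r, 50));
  globalThis.__faqOrder.push('X2');
`);
// y.mjs: synchronous, and does not import x.mjs.
const yUrl = moduleUrl(`globalThis.__faqOrder.push('Y');`);
// main.mjs: imports both; its own body waits for x.mjs to finish.
const mainUrl = moduleUrl(`
  import ${JSON.stringify(xUrl)};
  import ${JSON.stringify(yUrl)};
  globalThis.__faqOrder.push('main');
`);

const orderReady = import(mainUrl).then(() => globalThis.__faqOrder);
orderReady.then(order => console.log(order.join(' -> '))); // X1 -> Y -> X2 -> main
```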

Setup Work

Setup work refers to the initialization tasks that need to be performed when a module is loaded. This usually includes the following operations:

When we use TLA (that is, await in the top-level scope of a module rather than inside an async function), this implicitly tells the JavaScript runtime:

This setup work must be completed before the module can be considered fully loaded.

For example:

// database.js - database initialization

const config = await loadDatabaseConfig(); // load database configuration

const connection = await establishConnection(config); // establish connection

export const db = connection;

// api.js - API client initialization

const apiKey = await loadApiKey(); // load API key

const client = await initializeApiClient(apiKey); // initialize API client

export const api = client;

The key insight here is:

These setup operations are usually independent of each other, with no dependencies between them. In the example above, the database connection does not need to wait for the API client to finish initializing, and vice versa. Because dependencies are declared explicitly, and these two modules do not import each other, the JavaScript runtime can run these operations in parallel, potentially saving a lot of initialization time.
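A Node.js sketch of two independent setup modules, under stated assumptions: data: URL modules stand in for database.js and api.js, and a 30 ms timer stands in for the hypothetical loadDatabaseConfig, establishConnection, loadApiKey, and initializeApiClient calls. setupModule and the global __setupLog are illustrative names. Because neither module imports the other, the API setup starts before the database setup finishes, and app.mjs runs once both are done.

```javascript
// Build an importable ES module from source text (Node.js supports data: URL imports).
const moduleUrl = src => `data:text/javascript,${encodeURIComponent(src)}`;
globalThis.__setupLog = [];

// Hypothetical setup module: a 30 ms timer stands in for the real setup work.
const setupModule = name => moduleUrl(`
  globalThis.__setupLog.push('${name}:start');
  await new Promise(r => setTimeout(r, 30));
  globalThis.__setupLog.push('${name}:end');
  export const ${name} = { ready: true };
`);

const dbUrl = setupModule('db');
const apiUrl = setupModule('api');

// app.mjs imports both; neither setup module imports the other.
const appUrl = moduleUrl(`
  import { db } from ${JSON.stringify(dbUrl)};
  import { api } from ${JSON.stringify(apiUrl)};
  globalThis.__setupLog.push('app:' + (db.ready && api.ready));
`);

const setupDone = import(appUrl).then(() => globalThis.__setupLog);
setupDone.then(log => console.log(log.join(' -> ')));
```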

What is guaranteed about code execution order?

Modules start executing in the same order as in ES2015. When a module reaches an await, it yields control so that other modules can initialize themselves, in the order established by that same traversal.

Specifically:

Whether or not TLA is used, modules always start running in the post-order traversal established in ES2015: module bodies start executing from the deepest imports and proceed in the order in which the import statements are reached. When a top-level await is encountered, control passes to the next module in this traversal order, or to other asynchronously scheduled code.
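A small Node.js sketch of this traversal, assuming data: URL modules (moduleUrl and push are illustrative helpers, and __evalOrder is an illustrative global): main.mjs imports a.mjs and then b.mjs, and a.mjs imports c.mjs, which contains a top-level await. c.mjs starts first because it is the deepest import; when it suspends, control moves on to b.mjs, which does not depend on it; a.mjs and main.mjs run only after c.mjs finishes.

```javascript
// Build an importable ES module from source text (Node.js supports data: URL imports).
const moduleUrl = src => `data:text/javascript,${encodeURIComponent(src)}`;
// Source snippet that records a label in a shared global array.
const push = label => `globalThis.__evalOrder.push(${JSON.stringify(label)});`;
globalThis.__evalOrder = [];

// c.mjs: the deepest import; it suspends at a top-level await.
const cUrl = moduleUrl(`${push('c:start')} await null; ${push('c:end')}`);
// a.mjs: imports c.mjs, so its body must wait for c.mjs.
const aUrl = moduleUrl(`import ${JSON.stringify(cUrl)}; ${push('a')}`);
// b.mjs: imports nothing, so it does not wait for c.mjs.
const bUrl = moduleUrl(push('b'));
// main.mjs: imports a.mjs, then b.mjs.
const mainUrl = moduleUrl(`
  import ${JSON.stringify(aUrl)};
  import ${JSON.stringify(bUrl)};
  ${push('main')}
`);

const evalOrder = import(mainUrl).then(() => globalThis.__evalOrder);
evalOrder.then(order => console.log(order.join(' -> ')));
// c:start -> b -> c:end -> a -> main
```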

Do these guarantees meet the needs of polyfills?

Currently, in the absence of top-level await, polyfills are synchronous. So the idiom of importing a polyfill (which modifies the global object) and then importing a module that should be affected by it still works once top-level await is added. However, if a polyfill itself contains a top-level await, the modules that depend on it need to import it themselves to be sure it has taken effect.
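A Node.js sketch of the difference, assuming in-memory data: URL modules; the polyfill and consumer factories and the globals __fancyA and __fancyB are hypothetical. The polyfill awaits before patching the global. When the consumer is only a sibling of the polyfill, it runs while the polyfill is suspended and does not see the patch; when the consumer imports the polyfill itself, it waits and does see it.

```javascript
// Build an importable ES module from source text (Node.js supports data: URL imports).
const moduleUrl = src => `data:text/javascript,${encodeURIComponent(src)}`;

// Hypothetical polyfill that awaits before patching a global.
const polyfill = prop => moduleUrl(`
  await null;
  globalThis.${prop} = () => 'polyfilled';
`);

// Hypothetical consumer that checks for the global at its top level.
const consumer = (prop, extraImport = '') => moduleUrl(`
  ${extraImport}
  export const sawPolyfill = typeof globalThis.${prop} === 'function';
`);

// Case 1: polyfill and consumer are only siblings, imported by the same module.
const siblingsUrl = moduleUrl(`
  import ${JSON.stringify(polyfill('__fancyA'))};
  export { sawPolyfill } from ${JSON.stringify(consumer('__fancyA'))};
`);

// Case 2: the consumer imports the polyfill directly.
const directImport = `import ${JSON.stringify(polyfill('__fancyB'))};`;
const directUrl = moduleUrl(`
  export { sawPolyfill } from ${JSON.stringify(consumer('__fancyB', directImport))};
`);

const polyfillResults = Promise.all([
  import(siblingsUrl).then(ns => ns.sawPolyfill),
  import(directUrl).then(ns => ns.sawPolyfill),
]);
polyfillResults.then(([sibling, direct]) => console.log({ sibling, direct }));
// { sibling: false, direct: true }
```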

Does the Promise.all happen even if none of the imported modules have a top-level await?

If a module's execution is known to be synchronous (that is, if neither it nor any of its dependencies contains top-level await), there will be no Promise.all for that module. In that case, it simply runs synchronously.

These semantics retain the current behavior of ES modules, that is, the evaluation stage is completely synchronous when top-level await is not used. These semantics are different from the use of Promise in other places. For specific examples and further discussion, please see issue #43 and issue #47.
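A Node.js sketch of a fully synchronous graph, assuming data: URL modules (moduleUrl and the __syncLog global are illustrative). a.mjs queues a microtask, yet b.mjs and main.mjs still run before it, because evaluating a graph without top-level await involves no Promise.all and no promise jobs between module bodies.

```javascript
// Build an importable ES module from source text (Node.js supports data: URL imports).
const moduleUrl = src => `data:text/javascript,${encodeURIComponent(src)}`;
globalThis.__syncLog = [];

// a.mjs: records itself and queues a microtask; there is no top-level await anywhere.
const aUrl = moduleUrl(`
  globalThis.__syncLog.push('a');
  queueMicrotask(() => globalThis.__syncLog.push('microtask'));
`);
// b.mjs and main.mjs: plain synchronous modules.
const bUrl = moduleUrl(`globalThis.__syncLog.push('b');`);
const mainUrl = moduleUrl(`
  import ${JSON.stringify(aUrl)};
  import ${JSON.stringify(bUrl)};
  globalThis.__syncLog.push('main');
`);

const syncLog = import(mainUrl).then(() => globalThis.__syncLog);
syncLog.then(log => console.log(log.join(' -> '))); // a -> b -> main -> microtask
```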

How exactly are dependencies waited on? Does it really use Promise.all?

The semantics of a module without top-level await are synchronous: the entire dependency tree is executed in post-order, and a module will run after all dependencies are executed.

The same semantics apply to modules that contain top-level await: once such a module has finished executing, it triggers synchronous execution of all dependent modules that are ready to run. If a module contains top-level await, even if that await is never actually reached at runtime, the whole module is treated as "asynchronous", as if it were one large async function.

Therefore, any code that runs after it completes is in a Promise callback. However, from here, if multiple modules depend on it and these modules do not contain top-level await, then they will run synchronously without any Promise related work.
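The sketch below models this in Node.js, assuming data: URL modules with illustrative helper names and an illustrative __fanOut global. t.mjs contains a top-level await, and p.mjs and q.mjs both import it without using TLA themselves. Once t.mjs finishes, p.mjs, q.mjs, and main.mjs all run in a single synchronous step, so a microtask queued by p.mjs only runs after all three.

```javascript
// Build an importable ES module from source text (Node.js supports data: URL imports).
const moduleUrl = src => `data:text/javascript,${encodeURIComponent(src)}`;
// Source snippet that records a label in a shared global array.
const push = label => `globalThis.__fanOut.push(${JSON.stringify(label)});`;
globalThis.__fanOut = [];

// t.mjs: contains top-level await, so the whole module is asynchronous.
const tUrl = moduleUrl(`${push('t:start')} await null; ${push('t:end')}`);
// p.mjs and q.mjs: import t.mjs, with no top-level await of their own.
const pUrl = moduleUrl(`
  import ${JSON.stringify(tUrl)};
  ${push('p')}
  queueMicrotask(() => { ${push('microtask from p')} });
`);
const qUrl = moduleUrl(`import ${JSON.stringify(tUrl)}; ${push('q')}`);
// main.mjs: imports p.mjs, then q.mjs.
const mainUrl = moduleUrl(`
  import ${JSON.stringify(pUrl)};
  import ${JSON.stringify(qUrl)};
  ${push('main')}
`);

const fanOut = import(mainUrl).then(() => globalThis.__fanOut);
fanOut.then(log => console.log(log.join(' -> ')));
// t:start -> t:end -> p -> q -> main -> microtask from p
```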

Does top-level await increase the risk of deadlocks?

Top-level await does indeed create a new deadlock mechanism, but the proponents of this proposal believe that this risk is worth it for the following reasons:

- There are already many ways to create deadlocks or prevent program execution, and developer tools can help debug them

- Every deterministic deadlock-prevention strategy that was considered is too broad and would rule out appropriate, realistic, and useful patterns

Existing Ways to block progress

Infinite loop

// primes() stands for an infinite generator of prime numbers

for (const n of primes()) { console.log(`${n} is prime`); }

Infinite recursion

const fibonacci = n => (n ? fibonacci(n - 1) : 1); fibonacci(Infinity);

Atomics.wait

// Atomics.wait expects an Int32Array (or BigInt64Array) view of a SharedArrayBuffer

Atomics.wait(new Int32Array(shared_array_buffer), 0, 0);

Atomics allows blocking program progress by waiting on an index whose value never changes.

export function then

// a.mjs

export function then(f, r) {}

// main.mjs

async function start() { const a = await import('a'); console.log(a); }

Exporting a then function allows blocking import().
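The last case can be observed without waiting forever. In this Node.js sketch (a data: URL module built with an illustrative moduleUrl helper), a.mjs exports a then function that never calls its callbacks. Because import() resolves its promise with the module namespace object, and that object now looks like a thenable, the promise adopts it and never settles; racing it against a 100 ms timer shows the timer winning.

```javascript
// Build an importable ES module from source text (Node.js supports data: URL imports).
const moduleUrl = src => `data:text/javascript,${encodeURIComponent(src)}`;

// a.mjs: exports a `then` that never calls resolve or reject.
const thenableUrl = moduleUrl(`export function then(resolve, reject) {}`);

// Resolves with 'timed out' after ms milliseconds.
const timeout = ms => new Promise(resolve => setTimeout(() => resolve('timed out'), ms));

const raceResult = Promise.race([
  import(thenableUrl).then(() => 'import settled'),
  timeout(100),
]);
raceResult.then(result => console.log(result)); // timed out
```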

Summary

Ensuring program progress is a bigger problem

Rejected deadlock prevention mechanisms

When designing top-level await, one potential problem space was helping to detect and prevent possible forms of deadlock. For example, awaiting cyclic dynamic imports can introduce a deadlock into module execution.

The following deadlock-prevention discussion is based on this example:

<!-- index.html -->

<script type="module" src="a.mjs"></script>

// a.mjs

await import('./b.mjs');

// b.mjs

await import('./a.mjs');

Solution 1: In b.mjs, resolve the Promise immediately with the partially populated module record of a.mjs, even though a.mjs has not finished, to avoid the deadlock.

Solution 2: In b.mjs, reject the Promise for importing a.mjs because a.mjs has not finished (throwing an exception when an unfinished module is used), to prevent the deadlock.

Case analysis: Both strategies run into trouble when several pieces of code want to dynamically import the same module. Such multiple imports would normally involve no race and no risk of deadlock, yet neither mechanism handles this case well: one rejects the Promise, and the other fails to wait for the imported module to finish initializing.

Summary

No feasible deadlock avoidance strategy

Will top-level await work in transpilers?

To the greatest extent possible. The widely used CommonJS (CJS) module system does not directly support top-level await, so any transpilation strategy targeting it needs to be adapted. However, based on feedback and experience from several JavaScript module system authors (including transpiler authors), some adjustments have been made to the semantics of top-level await, and the goal of this proposal is for it to be implementable in such environments.

Without this proposal, module graph execution is synchronous. Does this proposal maintain developer expectations that such loading be synchronous?

Yes, to the greatest extent possible. When a module contains top-level await (even if that await is never reached at runtime), it is not synchronous and goes through the Promise job queue at least once. However, module subgraphs that do not use top-level await continue to run synchronously, exactly as they did before this proposal. And if several modules without top-level await depend on a module that uses it, they all run in one go once the async module is ready, without yielding to other work (neither to the Promise job/microtask queue nor to the host event loop). For details about the logic used, see issue #74.

Should module loading include microtask checkpoints between modules, or yielding to the event loop after modules load?

Perhaps! These module-loading questions are an exciting area of load-performance research, as well as an interesting discussion about microtask checkpoint invariants. This proposal takes no position on them, leaving asynchronous behavior to separate proposals. Host environments may wrap modules in ways that implement these behaviors, and the top-level await specification machinery can be used to coordinate them. Future proposals in TC39 or in host environments may add additional microtask checkpoints. For related discussion, see whatwg/html#4400.

Would top-level await work in web pages?

Yes. For detailed information on how to integrate into the HTML specification, please see whatwg/html#4352.

History

The async/await proposal was first presented to the committee in January 2014. In the April 2014 discussion, it was decided to reserve await in the module goal so that top-level await could be added in the future. In July 2015, the async/await proposal advanced to Stage 2. At that meeting, it was decided to defer standardizing top-level await so as not to hold up the current proposal, because top-level await needed "to be designed with loaders".

Since the decision to postpone the standardization of top-level await, it has been mentioned many times in committee discussions, mainly to ensure it is still possible in the language.

In May 2018, this proposal reached Stage 2 of the TC39 process, and many design decisions (in particular, whether to block the execution of "sibling" modules) were discussed during Stage 2.

Implementations

- V8 v8.9

- SpiderMonkey enabled through javascript.options.experimental.top_level_await flag

- JavaScriptCore

- webpack 5.0.0